Just as there are many ways to read data, we have just as many ways to write data.

- Writing Data

1. Writing Data

- Writing data to Parquet files

ใช้ตัวอย่างไฟล์ json จาก Read data in JSON format

# Required for StructField, StringType, IntegerType, etc.

from pyspark.sql.types import *

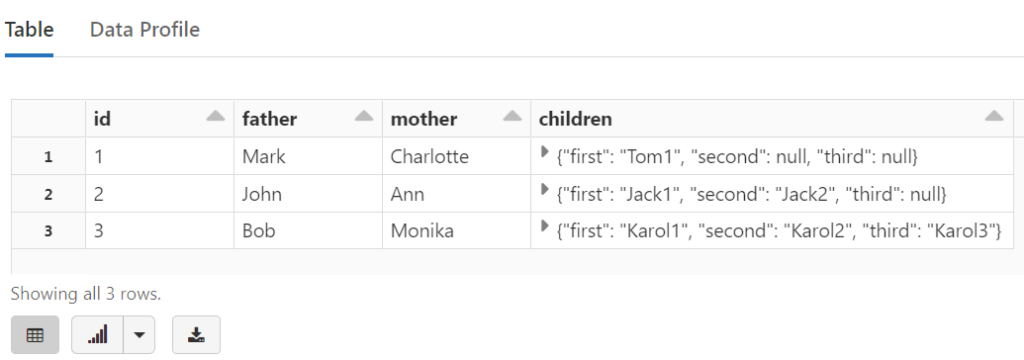

jsonSchema = StructType([

StructField("id", LongType(), True),

StructField("father", StringType(), True),

StructField("mother", StringType(), True),

StructField("children", StructType([

StructField("first", StringType(), True),

StructField("second", StringType(), True),

StructField("third", StringType(), True)

]), True),

])

jsonFile = "/mnt/training/sample2.json"

jsonDF = (spark.read

.schema(jsonSchema)

.json(jsonFile)

)

display(jsonDF)

Now that we have a DataFrame, we can write it back out as Parquet files or other various formats.

parquetFile = "/mnt/training/family.parquet"

print("Output location: " + parquetFile)

(jsonDF.write # Our DataFrameWriter

.option("compression", "snappy") # One of none, snappy, gzip, and lzo

.mode("overwrite") # Replace existing files

.parquet(parquetFile) # Write DataFrame to Parquet files

)

Now that the file has been written out, we can see it in the DBFS:

%fs ls /mnt/training/family.parquet

display(dbutils.fs.ls(parquetFile))

And lastly we can read that same parquet file back in and display the results:

display(spark.read.parquet(parquetFile))

Writing to CSV

ในตัวอย่างนี้ถ้า Write เป็น csv จะ error เพราะ CSV data source does not support struct<first:string,second:string,third:string> data type.

csvFile = "/mnt/training/family.csv"

print("Output location: " + csvFile)

(jsonDF.write # Our DataFrameWriter

.mode("overwrite") # Replace existing files

.csv(csvFile) # Write DataFrame to Parquet files

)

ถ้าเป็น json นี้ Write เป็น csv จะ error เพราะ CSV data source does not support array<string> data type.

# Required for StructField, StringType, IntegerType, etc.

from pyspark.sql.types import *

jsonSchema = StructType([

StructField("id", LongType(), True),

StructField("father", StringType(), True),

StructField("mother", StringType(), True),

StructField("children", ArrayType(StringType()), True)

])